import pandas as pd

from sklearn import datasets

import seaborn as sns

import skimpyThis notebook will use the Iris flower dataset from sklearn to introduce classification with Random Forest.

Feature importance and partial dependency plots will be created once the model is trained.

Overview

- Set up train-test data

- Review decision trees

- Visualize a decision tree

- Introduction to random forest

- Train and predict with a random forest

- Visualize feature importances

- Create partial dependency plots for random forest

- Knowledge check and questions

- Additional EDA & Visualizations

Prerequisites

- Python imports

- Train-test split

- Classification metrics

- Decision Trees

- Measures of node impurity (Shannon Entropy and Gini Index)

Learning Objectives

- Apply a random forest classifier to a dataset

- Visualize feature importances from a trained random forest model

Dependencies

This notebook was made with the following packages: 1. python=3.7.6 1. sklearn=1.2.2 1. matplotlib=3.1.3 1. pandas=1.0.1

Set up data set

data = datasets.load_iris()

df = pd.DataFrame(data.data, columns=data.feature_names)

df['target'] = data.target

df['label'] = df['target'].map({0: 'setosa', 1: 'versicolor', 2: 'virginica'})

df.head()| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | target | label | |

|---|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | 0 | setosa |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 | 0 | setosa |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | 0 | setosa |

| 3 | 4.6 | 3.1 | 1.5 | 0.2 | 0 | setosa |

| 4 | 5.0 | 3.6 | 1.4 | 0.2 | 0 | setosa |

df.shape(150, 6)df.label.value_counts()label

setosa 50

versicolor 50

virginica 50

Name: count, dtype: int64Train-Test Split

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(

df[data.feature_names],

df['label'],

random_state=42,

stratify=df['label']

)print(X_train.shape)

print(X_test.shape)

print(y_train.shape)

print(y_test.shape)(112, 4)

(38, 4)

(112,)

(38,)Decision Tree Review

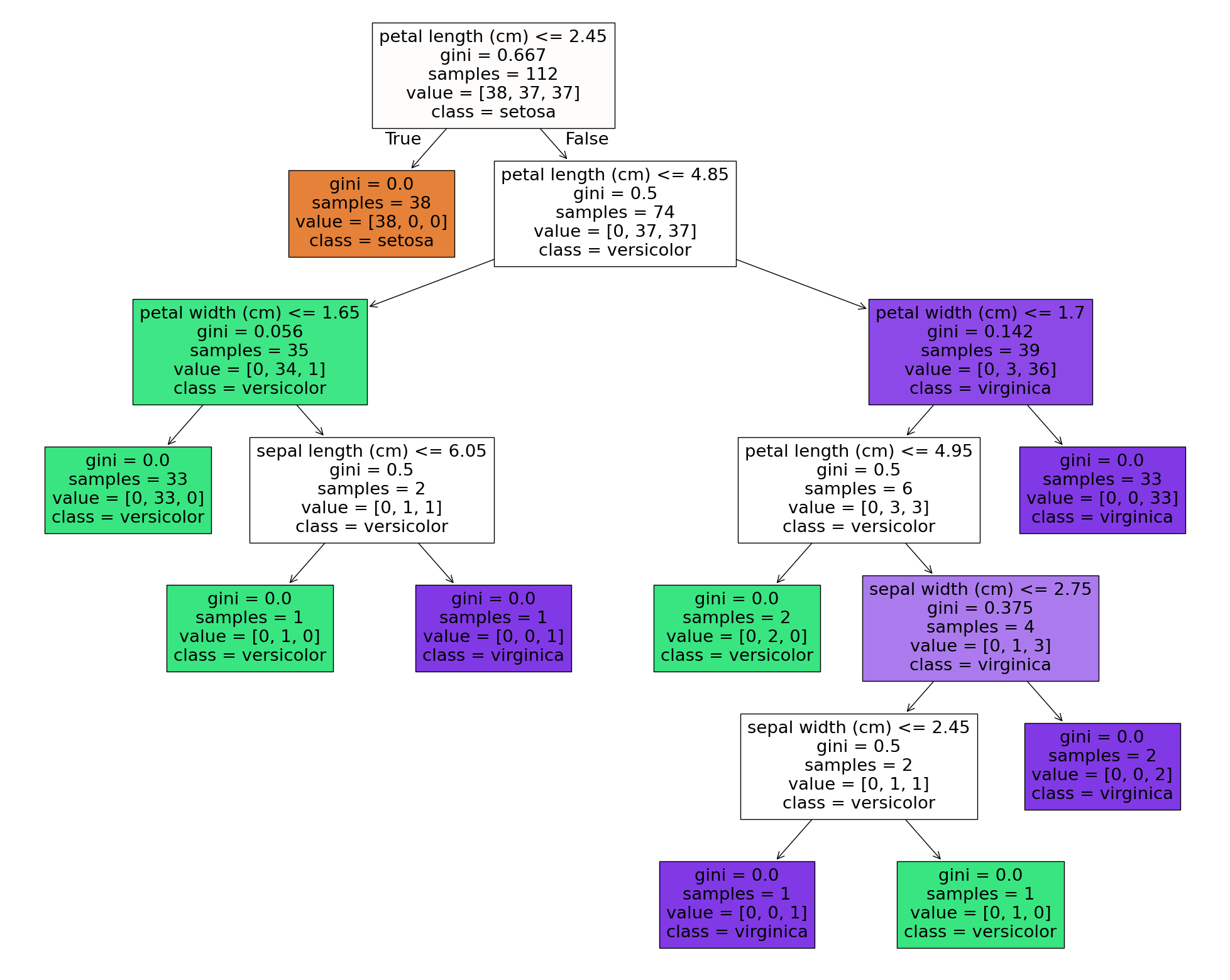

We will first build a decision tree model to review their structure before moving on to random forest classification

from matplotlib import pyplot as plt

from sklearn.tree import DecisionTreeClassifier

from sklearn import treedt = DecisionTreeClassifier(random_state=42)

dt.fit(X_train, y_train)DecisionTreeClassifier(random_state=42)In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook.

On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

DecisionTreeClassifier(random_state=42)

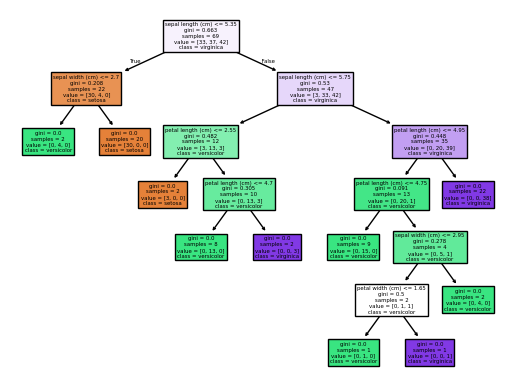

fig = plt.figure(figsize=(25,20))

_ = tree.plot_tree(dt,

feature_names=data.feature_names,

class_names=data.target_names,

filled=True)

predicted_labels = dt.predict(X_test)from sklearn.metrics import (

accuracy_score,

recall_score,

precision_score,

f1_score

)

def print_metrics(y_test, y_pred):

"""function to display model metrics in a human readable fashion

y_test: Array of true y values

y_pred: Arrah of predicted y values

"""

scores = [accuracy_score, recall_score, precision_score, f1_score]

s_labels = ['Accuracy', 'Recall', 'Precision', 'F1']

for score, s_label in zip(scores, s_labels):

if s_label == 'Accuracy':

print(f"{s_label}: {score(y_test, y_pred): .2f}")

else:

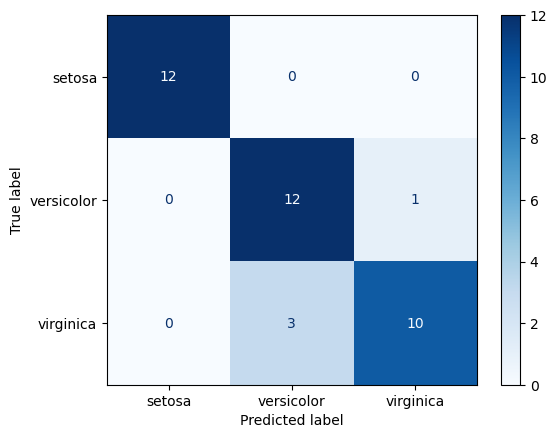

print(f"{s_label}: {score(y_test, y_pred, average='weighted'):.2f}")print_metrics(y_test, predicted_labels)Accuracy: 0.89

Recall: 0.89

Precision: 0.90

F1: 0.89from sklearn.metrics import ConfusionMatrixDisplay

cm_disp = ConfusionMatrixDisplay.from_predictions(y_true=y_test,y_pred=predicted_labels, cmap='Blues')

Decision Tree Knowledge

- Are decision trees deterministic?

- Yes they are deterministic. The best split will be found at each iterative step and will be used.

- How are decision trees split determined?

- Information gain or entropy reduction

- Are decision trees parametric?

- No, they are not parametric. Splits may differ in direction based on values.

- Decision trees often have high variance, why might that be?

- May split on wrong features or overfit to data.

From tree to forest

- How might multiple decision trees be leveraged to reduce variance?

- Create multiple classifiers and average the results. Multiple weak learners can ofter produce a strong learner.

- Would multiple deterministic decision trees be useful?

- Only if they were NOT deterministic.

- How could they not be deteministic?

- Bootstrapping data and limiting features at each step

Random Forest

- An ensemble method that combines many decision trees which have been given different subsets of the data and features to create a strong learner

- Decision made on majority vote

- Reduces variance and creates a non-deterministic model

- Generally use a large number of bushy trees

- Can get excellent performance with minimum tuning

Random Forest Example

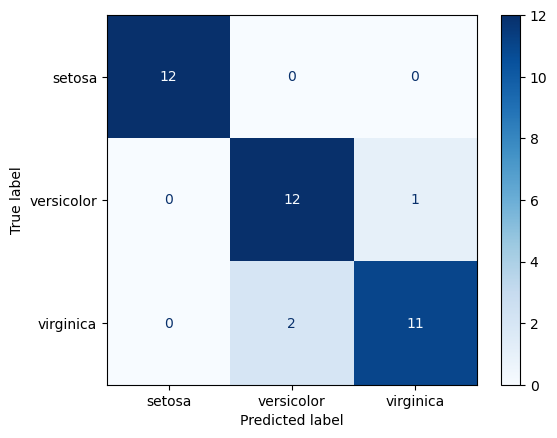

from sklearn.ensemble import RandomForestClassifier

rf = RandomForestClassifier(n_estimators=10, random_state=1)

rf.fit(X_train, y_train)

rf_preds = rf.predict(X_test)cm_disp = ConfusionMatrixDisplay.from_predictions(y_true=y_test, y_pred=rf_preds, cmap='Blues')

print_metrics(y_test, rf_preds)Accuracy: 0.92

Recall: 0.92

Precision: 0.92

F1: 0.92Visualizing trees in the forest

def plot_tree(est_num=0):

fn=data.feature_names

cn=data.target_names

tree.plot_tree(rf.estimators_[est_num],

feature_names = fn,

class_names = cn,

filled = True)plot_tree(est_num=0)

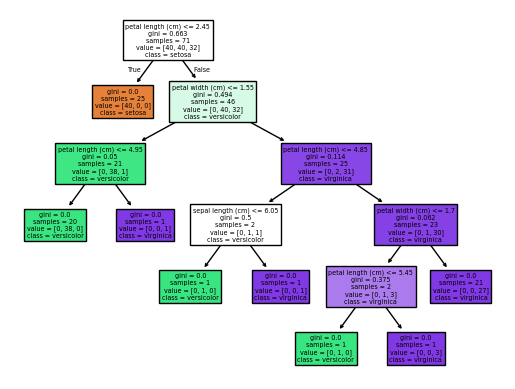

plot_tree(est_num=1)

Focusing only on the first split in the two trees above, we can see differences in the splits used to build the forest.

The first one split on sepal lenth <= 5.35 and the second on petal length <=2.45. The depth of the trees also varies. This is due to the randomness induced in the trees with bootstrapping and feature selection.

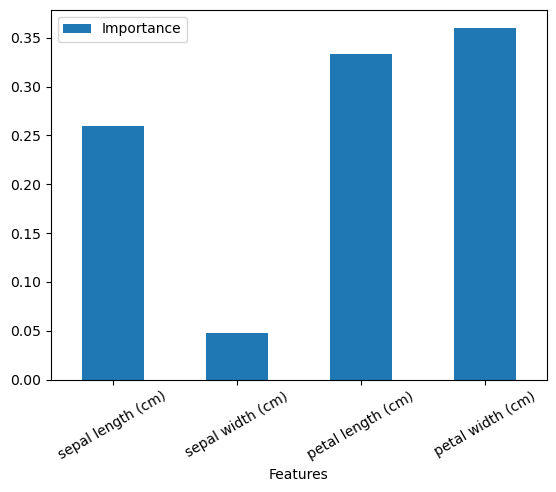

Random Forest Feature Importances

ft_import = pd.DataFrame()

ft_import['Features'] = data.feature_names

ft_import['Importance'] = rf.feature_importances_

ft_import| Features | Importance | |

|---|---|---|

| 0 | sepal length (cm) | 0.259131 |

| 1 | sepal width (cm) | 0.047562 |

| 2 | petal length (cm) | 0.332972 |

| 3 | petal width (cm) | 0.360335 |

# All importances sum to 1

ft_import.Importance.sum()np.float64(1.0)ft_import.plot.bar(x='Features', y='Importance', rot=30)

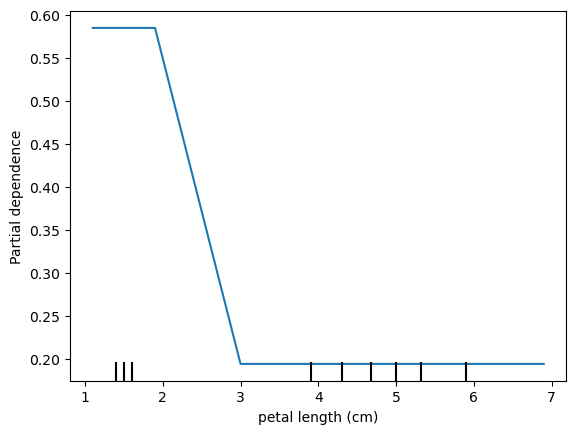

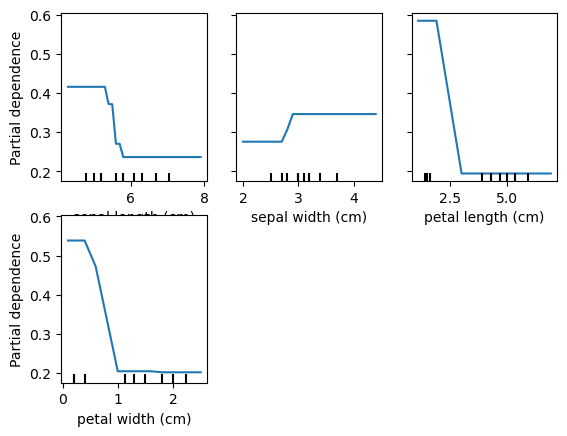

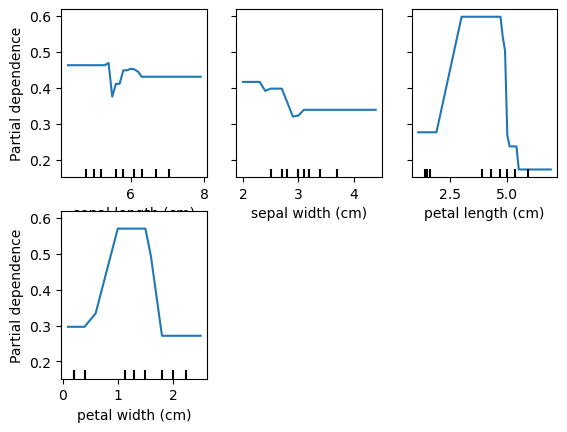

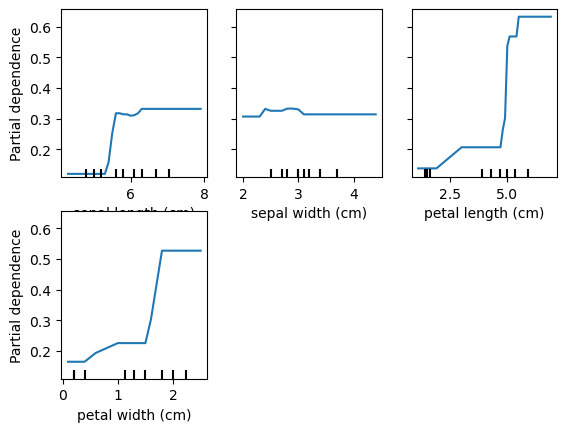

Partial Dependency Plots

Shows the marginal effect of a feature on predictions.

Shows effect of predictions when all observations have a feature set to a particular value.

from sklearn.inspection import PartialDependenceDisplay

pd_plot = PartialDependenceDisplay.from_estimator(rf, X_train, ['petal length (cm)'], target='setosa')

for target in data.target_names:

print('Target is: ', target)

# plot_partial_dependence(rf, X_train, data.feature_names, target=target)

PartialDependenceDisplay.from_estimator(rf, X_train, data.feature_names, target=target)Target is: setosa

Target is: versicolor

Target is: virginica

Advantages of Random Forests

- Ensemble model (Wisdom of the Crowd)

- Good out-of-box performance

- Multiple trees can be trained at once

Cons of Random Forests

- Expensive to train

- Can produce very large model files

Model Comparison and evaluation

- Which model performed better?

- Which feature had the most influence on the random forest model?

Knowledge Check

- A random forest is the same as combining many decision trees?

- Name two ways in which random forests are made non-deterministic.

- Random forest classifiers are parametric?

- Name two visuals to help intrepret random forest models.

Review Objectives

- Apply a random forest classifier to a dataset.

- Visualize feature importances from a trained random forest model.

Next Steps

- Tune the random forest classifier

- Number of estimators

- Criterion: default is gini, can also try entropy

- Max depth, min samples

- Number of features

- Create a random forest regressor and test on sklearn Boston housing data

- Compare to decision tree model

- Plot feature importances

- Create partial dependency plots

Full Day Activities

- Code random forest from scratch

- Code partial dependecy plot function from scratch

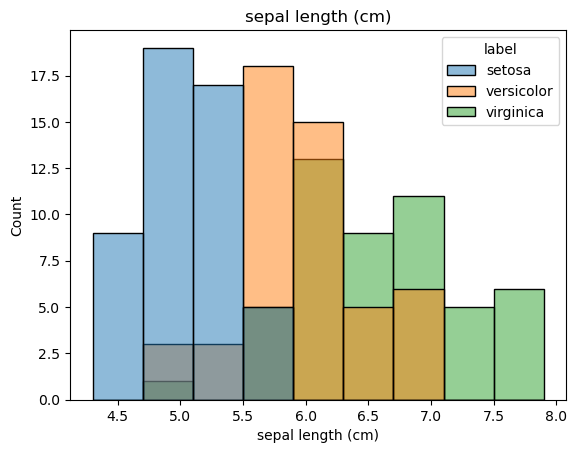

Additional EDA & Visualizations

skimpy.skim(df)╭──────────────────────────────────────────────── skimpy summary ─────────────────────────────────────────────────╮ │ Data Summary Data Types │ │ ┏━━━━━━━━━━━━━━━━━━━┳━━━━━━━━┓ ┏━━━━━━━━━━━━━┳━━━━━━━┓ │ │ ┃ Dataframe ┃ Values ┃ ┃ Column Type ┃ Count ┃ │ │ ┡━━━━━━━━━━━━━━━━━━━╇━━━━━━━━┩ ┡━━━━━━━━━━━━━╇━━━━━━━┩ │ │ │ Number of rows │ 150 │ │ float64 │ 4 │ │ │ │ Number of columns │ 6 │ │ int64 │ 1 │ │ │ └───────────────────┴────────┘ │ string │ 1 │ │ │ └─────────────┴───────┘ │ │ number │ │ ┏━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━┳━━━━━━━━┳━━━━━━━━━┳━━━━━━━━━━┳━━━━━━┳━━━━━━┳━━━━━━━━┳━━━━━━┳━━━━━━━┳━━━━━━━━━┓ │ │ ┃ column ┃ NA ┃ NA % ┃ mean ┃ sd ┃ p0 ┃ p25 ┃ p50 ┃ p75 ┃ p100 ┃ hist ┃ │ │ ┡━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━╇━━━━━━━━╇━━━━━━━━━╇━━━━━━━━━━╇━━━━━━╇━━━━━━╇━━━━━━━━╇━━━━━━╇━━━━━━━╇━━━━━━━━━┩ │ │ │ sepal length (cm) │ 0 │ 0 │ 5.843 │ 0.8281 │ 4.3 │ 5.1 │ 5.8 │ 6.4 │ 7.9 │ ▃██▇▅▂ │ │ │ │ sepal width (cm) │ 0 │ 0 │ 3.057 │ 0.4359 │ 2 │ 2.8 │ 3 │ 3.3 │ 4.4 │ ▁▇█▇▂▁ │ │ │ │ petal length (cm) │ 0 │ 0 │ 3.758 │ 1.765 │ 1 │ 1.6 │ 4.35 │ 5.1 │ 6.9 │ █ ▂▇▆▂ │ │ │ │ petal width (cm) │ 0 │ 0 │ 1.199 │ 0.7622 │ 0.1 │ 0.3 │ 1.3 │ 1.8 │ 2.5 │ █ ▂▆▄▄ │ │ │ │ target │ 0 │ 0 │ 1 │ 0.8192 │ 0 │ 0 │ 1 │ 2 │ 2 │ █ █ █ │ │ │ └────────────────────────┴─────┴────────┴─────────┴──────────┴──────┴──────┴────────┴──────┴───────┴─────────┘ │ │ string │ │ ┏━━━━━━━━┳━━━━┳━━━━━━┳━━━━━━━━━━┳━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━━━━━┳━━━━━━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━┓ │ │ ┃ ┃ ┃ ┃ ┃ ┃ ┃ ┃ chars per ┃ words per ┃ ┃ │ │ ┃ column ┃ NA ┃ NA % ┃ shortest ┃ longest ┃ min ┃ max ┃ row ┃ row ┃ total words ┃ │ │ ┡━━━━━━━━╇━━━━╇━━━━━━╇━━━━━━━━━━╇━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━━━━━╇━━━━━━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━┩ │ │ │ label │ 0 │ 0 │ setosa │ versicolor │ setosa │ virginica │ 8.33 │ 1 │ 150 │ │ │ └────────┴────┴──────┴──────────┴────────────┴────────┴───────────┴─────────────┴──────────────┴─────────────┘ │ ╰────────────────────────────────────────────────────── End ──────────────────────────────────────────────────────╯

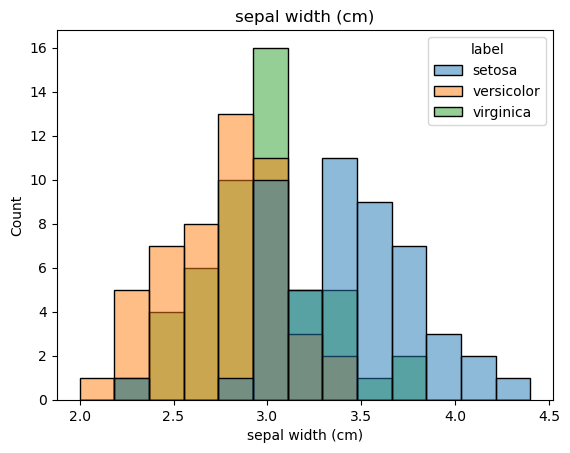

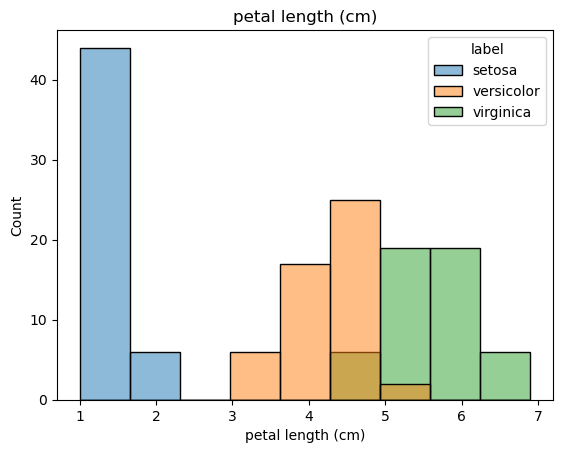

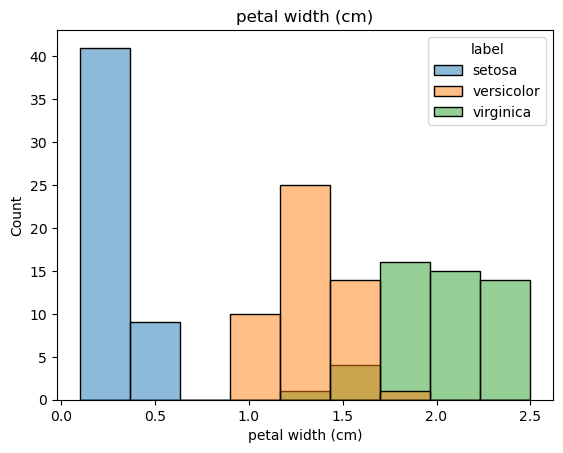

features = df.columns[:4]

featuresIndex(['sepal length (cm)', 'sepal width (cm)', 'petal length (cm)',

'petal width (cm)'],

dtype='object')for feat in features:

sns.histplot(x=df[feat], hue=df['label']).set(title=feat)

plt.figure()

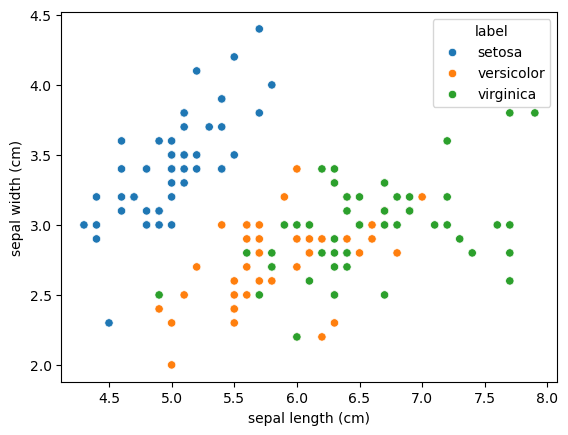

<Figure size 640x480 with 0 Axes>sns.scatterplot(data=df, x='sepal length (cm)', y='sepal width (cm)', hue='label')

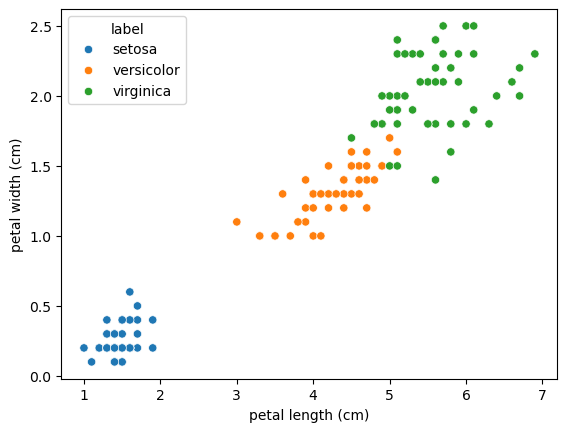

sns.scatterplot(data=df, x='petal length (cm)', y='petal width (cm)', hue='label')